AI tool that replaced a team of web researchers

«Notably, the data has been impressively clean for a dataset of its size... The files were immediately usable and easy to review. Many thanks to you and the dev team for the strong work and careful QA here» - Scott Kluth, Founder and CEO.

Our ongoing client — Coupon Cabin, a well-established U.S. cashback company — reached out to us knowing our track record in applying AI to business automation. Their marketing department relies on a constant flow of structured merchant data, including links to policies and social media, summaries of shipping, return, and cancellation policies, and more.

Merchant websites, however, vary dramatically in structure and content. Collecting this data manually is time-consuming and costly, so much so that the client maintained a dedicated internal team for it.

It had become clear to the client that this approach was not sustainable: they were spending excessive time and money on repetitive, low-value manual work. The team needed about two weeks to go through all the stores, and then had to start the next round immediately to keep the data current. With manual review, details were also occasionally missed.

The traditional way to scale this would be to hire more people, but new employees need training and management, which boils down to more money and time. As the number of merchants kept growing, expanding coverage with a human-only process became increasingly unrealistic: the workload was scaling much faster than the team ever could. Automation was the only viable way to reduce operational costs and scale data coverage efficiently.

At the same time, the client needed to ensure tight control over AI-related costs — especially the volume of tokens consumed by large language model calls — so the automation itself remained economically viable.

The client’s goal was to automate the merchant data collection pipeline as much as possible, drastically reducing manual work.

Solution

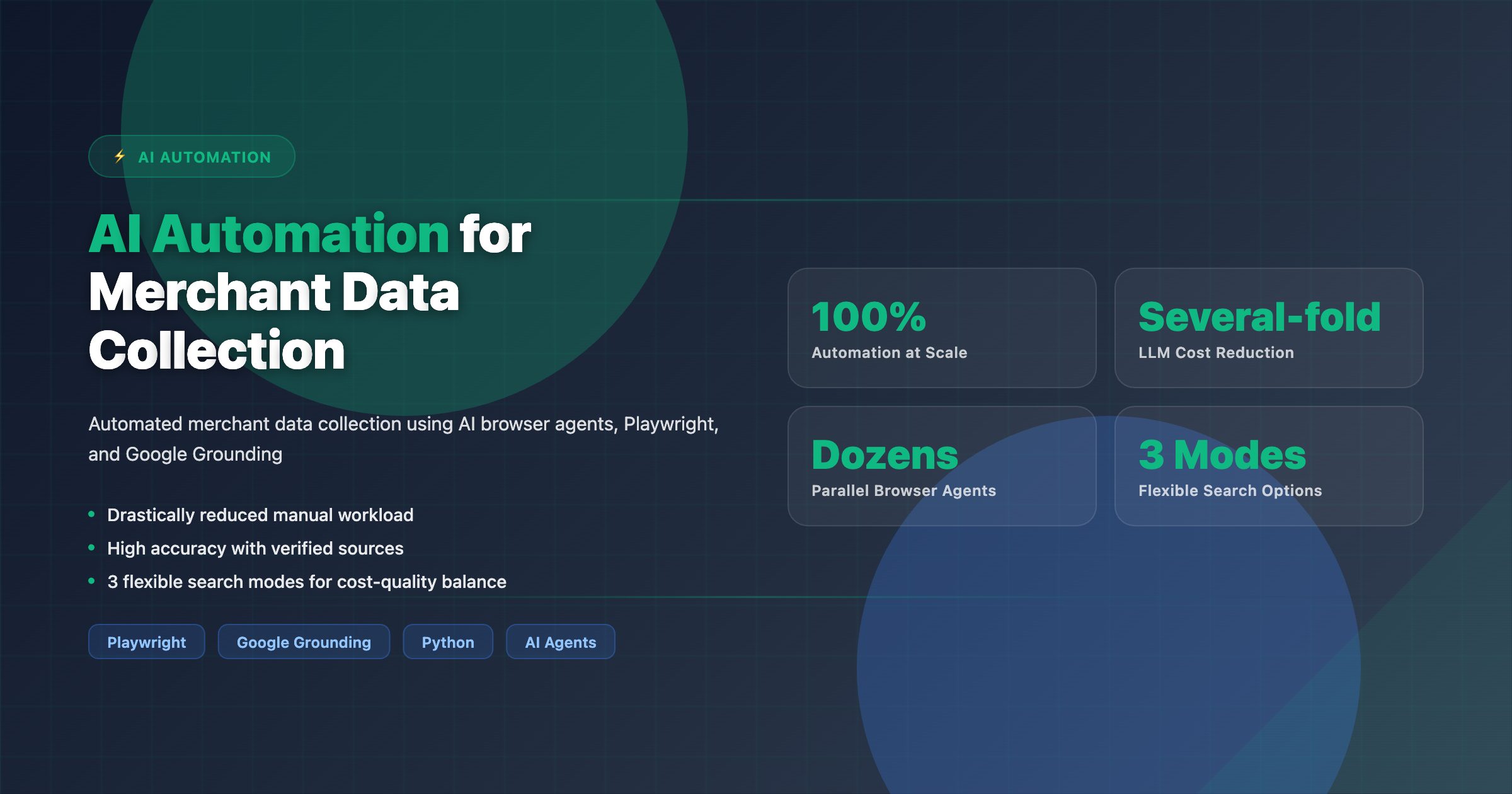

We built a tool that combines AI browser agents, Playwright automation, and Google Grounding to collect and structure key merchant information — fast, reliably, and with verified sources.

The system automatically identifies and analyzes pages on merchant websites, extracts key details, summarizes textual content, and returns all data in a normalized JSON format, ready for integration into analytics systems, databases, or LLM pipelines.
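As an illustration of what a normalized, source-linked record might look like, here is a minimal Python sketch. The class names, field names, and the example merchant are all hypothetical; the actual schema used in the project is not described here. The only ideas taken from the text are that each merchant gets policy links, social links, and policy summaries, and that extracted data is tied to a source.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class SourcedText:
    """A summary together with the URL it was derived from (hypothetical shape)."""
    text: str
    source_url: str


@dataclass
class MerchantRecord:
    """One normalized merchant entry; every field name here is an assumption."""
    merchant: str
    policy_links: dict[str, str] = field(default_factory=dict)
    social_links: dict[str, str] = field(default_factory=dict)
    policy_summaries: dict[str, SourcedText] = field(default_factory=dict)

    def to_json(self) -> str:
        # asdict() recurses into nested dataclasses, so SourcedText values
        # become plain dictionaries before serialization.
        return json.dumps(asdict(self), indent=2, sort_keys=True, ensure_ascii=False)


if __name__ == "__main__":
    record = MerchantRecord(
        merchant="example-store.com",
        policy_links={"returns": "https://example-store.com/returns"},
        policy_summaries={
            "returns": SourcedText(
                text="Items can be returned within 30 days.",
                source_url="https://example-store.com/returns",
            )
        },
    )
    print(record.to_json())
```

Keeping the source URL next to each summary, rather than in a separate list, makes it straightforward for a reviewer to check any single data point against the page it came from.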

The tool supports three AI search modes, depending on the client's preference:

- Fast and cheap: slightly lower quality of results.

- Balanced: takes a bit longer; results are 10% better.

- Highest quality: the slowest and costliest of the three options; improves the quality of results by a further 10%.

Challenges

- High LLM cost and low speed. Each agent step required processing the DOM, screenshots, and large context windows, making requests token-intensive and slow. Solution: We integrated Google Grounding as a lightweight data retrieval path and applied strict prompt and context optimization, reducing LLM costs several-fold.

- Heavy browser infrastructure. Running dozens of agents in parallel demanded robust orchestration, profile isolation, and resource control. Solution: A Python-based orchestrator with worker pools, user-data profile management, and automatic session reinitialization to prevent memory leaks and rendering hangs.

- Anti-bot protection bypass. Merchant websites frequently use Cloudflare, hCaptcha, and reCAPTCHA to block automation. Solution: Middleware for CAPTCHA detection, background solving via 2Captcha, proxy rotation, and fallback reruns to keep workflows uninterrupted.

- Grounding and JSON output. The Grounding tool does not support returning structured JSON together with sources. Solution: A two-step conversion flow: first fetch Markdown with citations via Grounding, then convert it to JSON in a second model call.

- Pipeline stability and async execution. Dozens of agents and steps increased the risk of partial failures and made it difficult to trace exactly where things broke. Solution: Fine-grained logging, task statuses, and retry logic at both the agent and orchestrator levels, ensuring resilience and recoverability.
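The worker-pool, task-status, and retry ideas from the list above can be sketched with Python's standard `asyncio` library. This is a minimal illustration under stated assumptions, not the project's orchestrator: `Task`, `Status`, `run_task`, `run_all`, and the `collect` callable are hypothetical names, and real browser agents, profile management, and CAPTCHA handling are left out entirely.

```python
import asyncio
import logging
from dataclasses import dataclass
from enum import Enum
from typing import Awaitable, Callable, Optional

logger = logging.getLogger("orchestrator")  # hypothetical logger name


class Status(str, Enum):
    PENDING = "pending"
    SUCCEEDED = "succeeded"
    FAILED = "failed"


@dataclass
class Task:
    """Tracks one merchant's collection job so failures can be traced later."""
    merchant: str
    status: Status = Status.PENDING
    attempts: int = 0
    result: Optional[dict] = None
    error: Optional[str] = None


Collector = Callable[[str], Awaitable[dict]]


async def run_task(task: Task, collect: Collector,
                   max_attempts: int = 3, base_delay: float = 1.0) -> None:
    """Run one task, retrying with exponential backoff on any exception."""
    for attempt in range(1, max_attempts + 1):
        task.attempts = attempt
        try:
            task.result = await collect(task.merchant)
            task.status = Status.SUCCEEDED
            task.error = None
            return
        except Exception as exc:  # broad on purpose: any failure is retried
            task.error = repr(exc)
            logger.warning("merchant=%s attempt=%d failed: %s",
                           task.merchant, attempt, task.error)
            if attempt < max_attempts:
                await asyncio.sleep(base_delay * 2 ** (attempt - 1))
    task.status = Status.FAILED


async def run_all(merchants: list, collect: Collector, workers: int = 4,
                  max_attempts: int = 3, base_delay: float = 1.0) -> list:
    """Process all merchants with at most `workers` jobs running at once."""
    semaphore = asyncio.Semaphore(workers)
    tasks = [Task(merchant) for merchant in merchants]

    async def guarded(task: Task) -> None:
        async with semaphore:
            await run_task(task, collect, max_attempts, base_delay)

    await asyncio.gather(*(guarded(task) for task in tasks))
    return tasks
```

Because every `Task` keeps its own status, attempt count, and last error, a partially failed run can be inspected afterwards and only the failed merchants resubmitted, for example with `asyncio.run(run_all(failed_merchants, collect))`.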

Business Value

- Automation at scale: Transforms a complex, multi-step data collection process into a repeatable, fully automated pipeline.

- Reduced manual workload: Frees up the client’s in-house team from tedious data extraction tasks.

- High accuracy & transparency: Every extracted data point is tied to a verified source; selection criteria can be flexibly configured.

- Scalability: The orchestrator manages dozens of parallel browser profiles and agents, supporting new data types without compromising performance.

- Flexibility in balancing cost, time, and quality of results.

«Thanks again for all the work on this project. We see a lot of promise in the output and are excited about moving this data live» - Scott Kluth, Founder and CEO.